Justice and hope bloom in the wild world of online mental health

Regulatory action and public pressure could be s-l-o-w-l-y pushing the industry towards sanity.

Which modern healthcare invention is guilty of brazen abuse of the safety and trust of vulnerable consumers, even as it amasses billions from eager investors and regulators struggle to catch up? If you are a regular reader of Sanity, you know the answer: 'tell-us-your-deepest-darkest-secrets-so-we-can-monetise-the-heck-out-of-them', AKA mental health apps.

The digital mental health industry consists of two main kinds of companies: i) companies that use a combination of 'therapy chatbots' and human counselors, and ii) aggregators or marketplaces that match counselors with patients. They sold us an attractive story: Conventional mental health care is broken. We don't have enough psychiatrists or therapists. People can't get help because of stigma and high costs. And the solution to all this? Technology, of course.

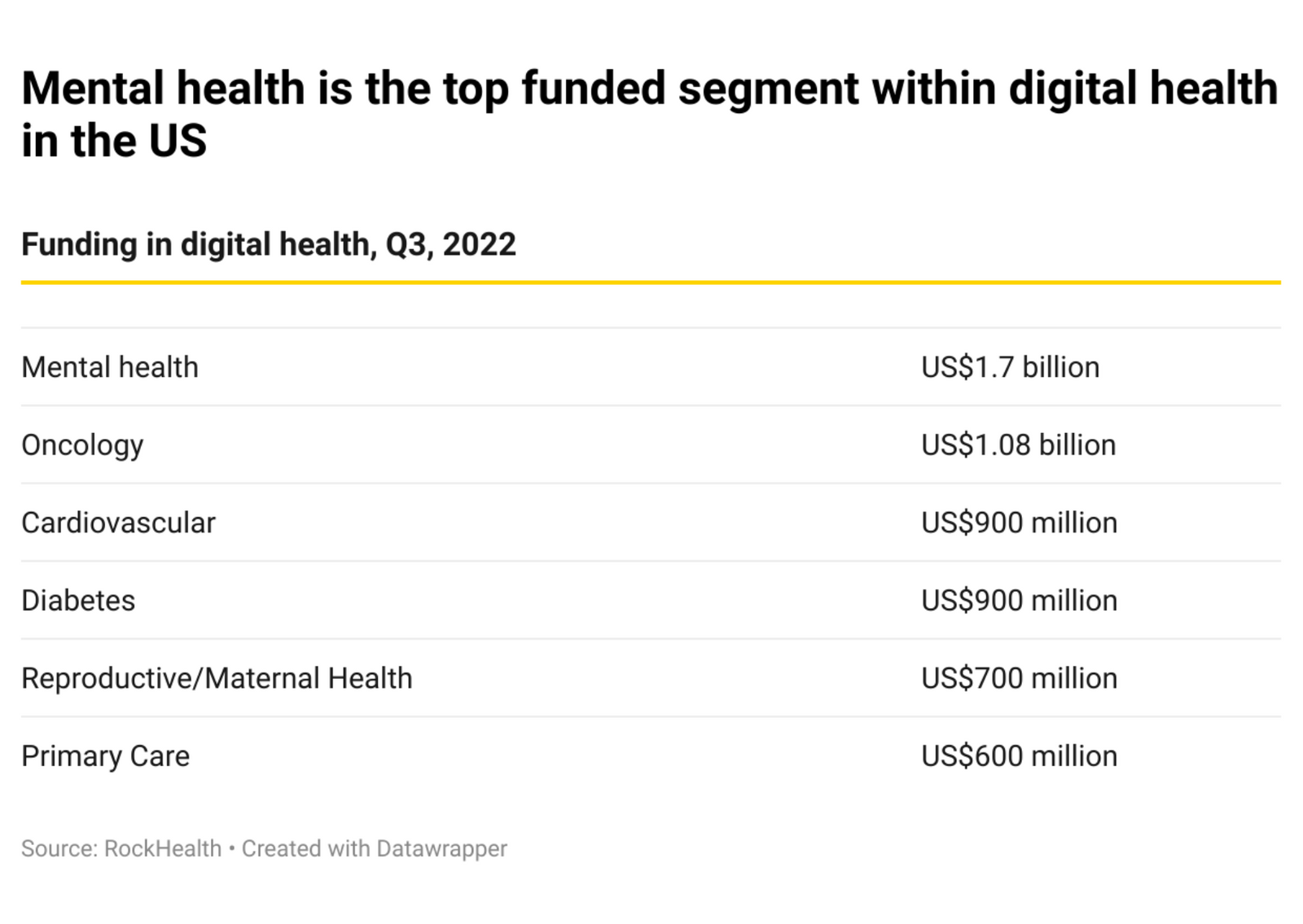

The pitch worked, creating an industry on steroids. Nobody knows the exact number of mental health apps in circulation, but one estimate puts it at over 20,000. Venture capital investments in mental health startups soared to a record US$5.5 billion in 2021 from US$2.3 billion in 2020. While digital health funding cooled in 2022 in the wake of a worldwide economic crisis, mental health sat pretty as the highest funded clinical indication, beating oncology and cardiovascular innovations. The future looks gung-ho, too. According to one estimate, the market for artificial intelligence-driven solutions in mental health is slated to balloon from US$880 million in 2022 to nearly US$4 billion in 2027.

But those handsome numbers hide the seedy reality of the business, exposed by watchdogs such as Mozilla Foundation.

In its 2022 *Privacy Not Included report, a widely followed annual publication that aims to help people 'shop smart – and safe – for products that connect to the internet', Mozilla Foundation said that the vast majority of these apps are 'exceptionally creepy', because they track, share, and capitalise on the most intimate personal thoughts, feelings, moods, and biometric data of their users. They routinely share data, allow weak passwords, target vulnerable users with personalised ads, and feature vague and poorly written privacy policies. While claiming to offer a better alternative to conventional care, these predatory apps feed off people dealing with incredibly sensitive issues, such as depression, anxiety, suicidal thoughts, domestic violence, eating disorders, and PTSD.

If that's giving you a panic attack like it did me, you can breathe (a little). In 2023, the wild world of online mental health is showing signs of a heartening, if s-l-o-w, pivot to sanity, thanks to growing public pressure and some historic regulatory action.

Payback time

Exhibit A: In March, the US Federal Trade Commission issued a scathing order against one of the industry's biggest names, BetterHelp, for 'betraying consumers' most personal health information for profit'.